(An excerpt from the upcoming book: Fundamentals of Complex System Sustainment)

It’s amazing what you can see by looking.

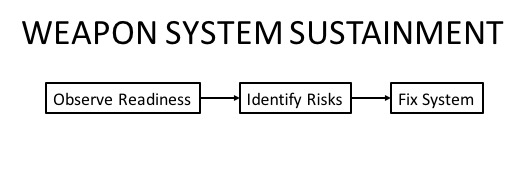

To be prepared for the risk meeting discussed in the previous chapter, you must already have observed the system to some degree. In other words, you must have carried out the “O” of O-I-F.

Experts know that observation carries with it the potential to misunderstand or misinterpret. Preconceived notions of what the shadows on Plato’s cave wall[1] should mean can get in the way of understanding the truth behind the estimates. Even after readiness metrics have been estimated for every part of the weapon system, the system is likely still not fully understood.

For example, when a guidance engineer takes all the data from a test flight, one of the first steps is to make a best estimate of the actual trajectory. Even the most accurate global positioning data has enough error in time and position to affect any subsequent analysis. If the analyst doesn’t know for sure where the missile was, how can the missile’s accuracy be reliably assessed? How can creeping accuracy degradations be detected?

The answer is to understand the potential observation errors and characterize them, so that each observation carries an estimate of confidence. Small observation errors can be tolerated; larger errors call for more effort.
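One common way to put numbers on this, sketched here under stated assumptions, is to treat tracking error and the missile’s own flight-to-flight scatter as independent, so that their variances add. Subtracting an assumed tracking variance from the observed scatter then estimates the missile’s true dispersion, and the ratio of the two shows whether the observation error is small enough to tolerate. The function names, the 1-sigma tracking error, and the miss-distance values below are hypothetical and purely illustrative.

```python
import math
import statistics

def true_dispersion(observed_misses_m, tracking_sigma_m):
    """Estimate the missile's own 1-sigma dispersion (meters).

    Assumes tracking (observation) error is independent of the missile's
    true scatter, so variances add: observed_var = true_var + tracking_var.
    Returns None when tracking error alone explains the observed scatter.
    """
    observed_var = statistics.variance(observed_misses_m)  # sample variance
    excess = observed_var - tracking_sigma_m ** 2
    if excess <= 0:
        return None  # observation error swamps the signal
    return math.sqrt(excess)

def noise_fraction(observed_misses_m, tracking_sigma_m):
    """Share of observed variance attributable to tracking error.

    Values at or above 1 mean tracking error could explain all the scatter.
    """
    return tracking_sigma_m ** 2 / statistics.variance(observed_misses_m)

# Hypothetical signed miss distances along one axis, in meters.
misses = [3.0, -1.0, 2.0, -4.0, 0.0]
print(true_dispersion(misses, tracking_sigma_m=1.5))  # about 2.29
print(noise_fraction(misses, tracking_sigma_m=1.5))   # 0.3
print(true_dispersion(misses, tracking_sigma_m=3.0))  # None
```

A noise fraction near zero means the observation error is tolerable; as it approaches one, more tracking effort (or more flights) is needed before anything can be concluded about the missile itself.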

The risk that the system remains incompletely understood is also mitigated by cross-checking different kinds of observations against one another, a kind of “active observing.” If a set of factory battery tests predicts 50% reliability for 10-year-old batteries, yet 9 missiles have flown with 10-year-old batteries without a single failure, perhaps the factory tests need some improvement.
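The battery cross-check can be quantified with a simple binomial model, sketched here under the assumption that each flight is an independent pass/fail trial of the battery. If the factory estimate of 50% reliability were right, nine straight successes would be very unlikely. The standard zero-failure (“success run”) lower bound, R_L = (1 - C)^(1/n), gives the reliability that n failure-free flights support at confidence C. The function names are illustrative.

```python
from math import comb

def prob_at_most_k_failures(n_trials, reliability, k=0):
    """Binomial probability of at most k failures in n independent trials."""
    p_fail = 1.0 - reliability
    return sum(
        comb(n_trials, i) * p_fail ** i * reliability ** (n_trials - i)
        for i in range(k + 1)
    )

def success_run_lower_bound(n_successes, confidence):
    """One-sided lower confidence bound on reliability after n successes
    and zero failures: R_L = (1 - C) ** (1 / n)."""
    return (1.0 - confidence) ** (1.0 / n_successes)

# Chance of 9 failure-free flights if the factory's 50% estimate were true.
p = prob_at_most_k_failures(9, 0.5)       # 0.5**9 = 1/512, about 0.002
# Reliability demonstrated by 9 failure-free flights at 90% confidence.
r_low = success_run_lower_bound(9, 0.90)  # about 0.774
print(f"P(9 of 9 | R=0.5) = {p:.4f}, R_L at 90% = {r_low:.3f}")
```

The two numbers tell the same story from opposite directions: either the fleet was extraordinarily lucky, or the factory test understates field reliability. The model deliberately ignores differences between factory and flight conditions, which is exactly what a closer look at the tests would examine.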

What should be obvious in all this is that good observations depend heavily on excellent configuration tracking. How can you really know what your system is doing if some errant component, perhaps an early production lot of gyroscopes, consistently creates problems, yet you don’t recognize that the problem is limited to those few gyros?
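A minimal sketch of why configuration data matters: once each fielded unit is tagged with its component lot, failure rates can be broken out by lot, and what looks like a modest fleet-wide rate can turn out to be concentrated in a single lot. The record layout, field names, serial numbers, and lot identifiers below are hypothetical, and the data is illustrative only.

```python
from collections import defaultdict

def failure_rate_by(records, attribute):
    """Failure rate grouped by a configuration attribute (e.g. gyro lot).

    Each record is a dict holding the attribute and a 0/1 'failed' flag.
    """
    tallies = defaultdict(lambda: [0, 0])  # attribute value -> [failures, units]
    for rec in records:
        tally = tallies[rec[attribute]]
        tally[0] += rec["failed"]
        tally[1] += 1
    return {value: fails / units for value, (fails, units) in tallies.items()}

# Hypothetical fleet records; serials and lot IDs are made up.
fleet = [
    {"serial": "M001", "gyro_lot": "LOT-A", "failed": 1},
    {"serial": "M002", "gyro_lot": "LOT-A", "failed": 1},
    {"serial": "M003", "gyro_lot": "LOT-A", "failed": 0},
    {"serial": "M004", "gyro_lot": "LOT-B", "failed": 0},
    {"serial": "M005", "gyro_lot": "LOT-B", "failed": 0},
    {"serial": "M006", "gyro_lot": "LOT-C", "failed": 0},
]

fleet_rate = sum(r["failed"] for r in fleet) / len(fleet)
print(f"fleet-wide: {fleet_rate:.2f}")  # 0.33
for lot, rate in sorted(failure_rate_by(fleet, "gyro_lot").items()):
    print(f"{lot}: {rate:.2f}")
```

With real fleets the counts per lot are small, so a lot-level rate should be paired with a confidence estimate, such as a binomial confidence bound, before a lot is condemned.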

Yet another complexity must be laid upon the system sustainment function of “observing.” Your risk meeting participants will rightfully wonder whether your observations have spotted a trend. Does the risk describe an emerging failure mode that will degrade the system exponentially over the next few years? Although predicting the future is widely regarded as impossible, it must be done here with some confidence.
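One simple way to ask whether an observed failure rate is growing exponentially is to fit a straight line to the logarithm of the rate versus time and extrapolate. This is a sketch under strong assumptions (every rate positive, a pure exponential model, no uncertainty bands); the yearly rates below are hypothetical and chosen to double each year so that the result is easy to check.

```python
import math

def fit_exponential_trend(years, rates):
    """Least-squares fit of ln(rate) = a + b * year. Returns (a, b)."""
    logs = [math.log(r) for r in rates]  # requires every rate > 0
    n = len(years)
    x_mean = sum(years) / n
    y_mean = sum(logs) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(years, logs))
    sxx = sum((x - x_mean) ** 2 for x in years)
    b = sxy / sxx
    a = y_mean - b * x_mean
    return a, b

def predict_rate(a, b, year):
    """Extrapolate the fitted exponential trend to a future year."""
    return math.exp(a + b * year)

# Hypothetical annual failure rates for years 0-3 of service.
years = [0, 1, 2, 3]
rates = [0.01, 0.02, 0.04, 0.08]
a, b = fit_exponential_trend(years, rates)
doubling_time = math.log(2) / b  # years for the failure rate to double
print(f"doubling time: {doubling_time:.2f} yr")
print(f"predicted year-5 rate: {predict_rate(a, b, 5):.3f}")
```

Real data would be noisy, and an extrapolation several years out carries wide uncertainty; reporting a confidence interval on the fit alongside the point prediction is what turns a guess about the future into a prediction with quantified confidence.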

Unchecked, all this becomes ridiculously expensive.

As mentioned earlier, extracting sustainment funding from decision-makers is a serious business. This is appropriate, of course, as these top executives must be good guardians, typically of someone else’s wealth. So assessment must be effective, but it also must not consume too much scarce funding.